Welcome back

In this post we will look at how to extend an existing S2D cluster and what to think about when doing so.

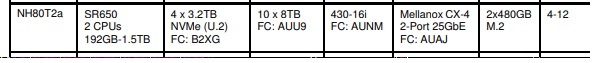

In my previous post we built a 4-node S2D cluster based on the following hardware.

What our current cluster is built on

4 x Lenovo SR650 nodes

Intel Gold 6144 CPU

1536GB Memory

4 x 3.2TB NVMe

10 x 8TB HDD

Mellanox ConnectX-4 25Gbit NIC

This gave us 64 pCores, 4.5TB of usable memory and about 96TB of usable storage. We also specced a Lenovo NE2572 switch for 25Gbit networking, which gives us a decently fast S2D cluster. With the CPU as the limiting factor, we should hit about 1.6 million IOPS in pure 4k read performance on the 4-node cluster.
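As a rough check on these baseline figures, the arithmetic can be sketched as below. Two things here are assumptions inferred from the numbers rather than stated configuration: that each node has two sockets (the Gold 6144 is an 8-core CPU, so 64 pCores over 4 nodes implies 16 cores per node), and that one node's worth of memory is held back so VMs can fail over.

```python
# Rough baseline capacity for the 4-node cluster.
NODES = 4
SOCKETS_PER_NODE = 2      # assumption: inferred from 64 pCores / 4 nodes
CORES_PER_SOCKET = 8      # Intel Gold 6144
MEMORY_PER_NODE_TB = 1.5  # 1536GB per node


def physical_cores(nodes):
    """Total physical cores across the cluster."""
    return nodes * SOCKETS_PER_NODE * CORES_PER_SOCKET


def usable_memory_tb(nodes, reserve_nodes=1):
    """Memory left for VMs after reserving capacity for failover (assumption)."""
    return (nodes - reserve_nodes) * MEMORY_PER_NODE_TB


print(physical_cores(NODES))    # 64
print(usable_memory_tb(NODES))  # 4.5
```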

In this scenario the client has said that they will be increasing the load on the cluster. They have acquired a new client, which means adding 150 new virtual machines. These VMs are more CPU heavy, use about 1.4TB of memory and require about 40TB of extra storage. When adding nodes to the cluster, we are limited by what we used in the first 4 nodes.

Current resource usage

391 Virtual Machines all Windows Servers

2.75 TB Memory

1945 virtual CPUs

79 TB storage usage

New Resource Usage

541 Virtual machines

4.15 TB memory

2565 vCPU

109TB storage

What to think about when extending the cluster

The important thing when extending an S2D cluster is to use the “same” hardware, meaning the new nodes should have the same CPU, memory, NICs and disks. When it comes to the disks we can use other brands or models, but they need to be the same size or bigger. Identical disks are preferred, but you can, for example, use an Intel P3700 and an Intel P4600 in the same cluster, as the Intel P3700 is no longer available. The same goes for capacity drives: they need to be the same size or bigger to be usable as available storage in the cluster.

Extending the cluster

First we need to calculate if we can achieve this by adding 1 or 2 nodes.

Memory

Here we are OK, as the 4-node cluster already has more usable memory than we will be using after adding the new client.

Storage

With the new requirements we are 13TB short with just the 4-node setup, so we need at least 1 more node. Adding an extra node increases usable storage by 26TB, which brings us to 123TB of usable storage. We will then have 14TB of free space in the pool to grow with. We will also have about 1.8TB of free memory in the cluster for existing and new VMs.
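The storage and memory headroom can be checked with a few lines of arithmetic. This is only a sketch using the figures from this post. The usable memory calculation assumes one node's memory is kept free for failover, which is how the 4.5TB figure for 4 nodes works out.

```python
# Figures taken from this post; all values in TB.
USABLE_STORAGE_4_NODES = 96
USABLE_STORAGE_5_NODES = 123
REQUIRED_STORAGE = 109
MEMORY_PER_NODE = 1.5
REQUIRED_MEMORY = 4.15


def headroom(available, required):
    """Positive means free capacity, negative means a shortfall."""
    return round(available - required, 2)


def usable_memory(nodes, reserve_nodes=1):
    """Memory available to VMs with one node held back (assumption)."""
    return (nodes - reserve_nodes) * MEMORY_PER_NODE


print(headroom(USABLE_STORAGE_4_NODES, REQUIRED_STORAGE))  # -13
print(headroom(USABLE_STORAGE_5_NODES, REQUIRED_STORAGE))  # 14
print(headroom(usable_memory(5), REQUIRED_MEMORY))         # 1.85
```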

Compute

With the extra node we also add physical CPU for the VMs to share, as the 150 new virtual machines will require more CPU than the previous VMs in the cluster. We will increase to 80 pCores and 160 vCores, giving us about 16 vmCPUs per vCore. With 4 nodes we had about 15.2 vmCPUs per vCore. But the cluster will handle this fine.
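The CPU ratios above can be reproduced with a small sketch. It assumes two logical processors per physical core, which matches 160 vCores on 80 pCores, and 16 physical cores per node.

```python
LOGICAL_PER_PHYSICAL = 2  # assumption: 160 vCores on 80 pCores
CORES_PER_NODE = 16       # 64 pCores / 4 nodes


def vcpu_ratio(vm_vcpus, nodes):
    """VM vCPUs per logical processor (vCore) in the cluster."""
    logical = nodes * CORES_PER_NODE * LOGICAL_PER_PHYSICAL
    return round(vm_vcpus / logical, 1)


print(vcpu_ratio(1945, 4))  # 15.2
print(vcpu_ratio(2565, 5))  # 16.0
```

Note that the ratio is calculated over all nodes. If one node is down, the remaining nodes carry a higher ratio, which is another reason to keep an eye on CPU usage.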

So we end up buying a new identical node with the same CPU, memory, cache and capacity drives, and so on.

Adding the new Node

First, configure the node with the OS and all the related configuration so it is ready to be added to the cluster. Once that is done, run Add-ClusterNode on a node in the cluster. It will add the new node to the cluster and claim all eligible storage for the cluster.

If you are not already doing it, it is important to start monitoring your CPU usage to see if the CPU is being strained, as we are now running 100+ VMs per node.

Some S2D Tips

The important thing to note here is to try to keep each virtual machine on the same node that owns its Cluster Shared Volume. This limits storage IO between nodes, so that the CPU can do what it is supposed to do: give resources to the VMs. Remember that if a VM is on node 1 and its CSV is on node 2, all storage IO must go through node 2 before it can be written.
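To illustrate the idea, here is a small sketch that lists VMs running on a different node than the one owning their CSV. The VM names, node names and volume names are hypothetical sample data. In a real cluster you would pull the owner information from your own inventory, for example from the output of Get-ClusterSharedVolume and Get-ClusterGroup.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    owner_node: str  # node the VM is currently running on
    volume: str      # CSV that holds the VM's disks


def misaligned(vms, csv_owners):
    """Return VMs running on a different node than the one owning their CSV."""
    return [vm for vm in vms if csv_owners.get(vm.volume) != vm.owner_node]


# Hypothetical sample inventory.
csv_owners = {"Volume1": "node1", "Volume2": "node2"}
vms = [
    VM("vm-a", "node1", "Volume1"),
    VM("vm-b", "node1", "Volume2"),  # runs on node1, CSV owned by node2
    VM("vm-c", "node2", "Volume2"),
]

for vm in misaligned(vms, csv_owners):
    owner = csv_owners.get(vm.volume)
    print(f"{vm.name}: runs on {vm.owner_node}, {vm.volume} is owned by {owner}")
```

Any VM that shows up in this list is a candidate for a live migration to the node that owns its volume.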

When creating the new volumes, try to use the same size and shape as the previous volumes. This will make it easier on the S2D cluster. It is also very important to keep volume sizes as small as possible, in case you need to run a DataIntegrityScan on a volume.