Hey everyone, it's been a while since my last post, but I have a good one for you today.

A client had been struggling to replace a failed capacity disk in a 2019 Azure Stack HCI cluster and wanted help sorting it out.

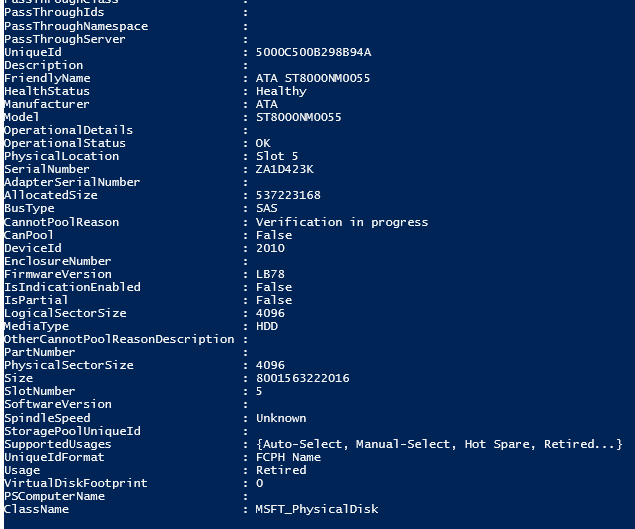

When I logged in, the disk had been partly “claimed” by the storage pool, but the cluster was struggling to verify it.

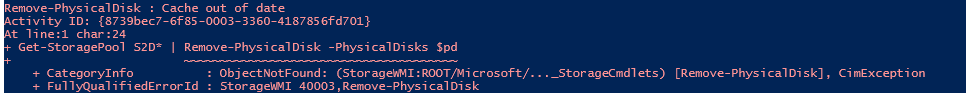

It was stuck on this verification indefinitely, and when I tried to remove the disk I got a “Cache out of date” error.

So we could not remove the disk, and even Reset-PhysicalDisk did not work.
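To spot a disk in this state from PowerShell instead of the GUI, a small filter over the physical disks can help. The sketch below is a hypothetical helper, Select-ProblemDisk (not part of any Microsoft module); it assumes the input objects come from Get-PhysicalDisk and keeps only disks whose HealthStatus is not Healthy or whose OperationalStatus contains anything other than OK, which is how a disk stuck in verification would surface.

```powershell
# Hypothetical helper (not a built-in cmdlet): keep only disks that are not
# both Healthy and OK, and show the properties useful for troubleshooting.
function Select-ProblemDisk {
    [CmdletBinding()]
    param(
        # Assumed to be physical disk objects, e.g. from Get-PhysicalDisk
        [Parameter(Mandatory, ValueFromPipeline)]
        $Disk
    )
    process {
        # OperationalStatus can hold several values, so check each one
        $statusNotOk = @($Disk.OperationalStatus | Where-Object { $_ -ne 'OK' }).Count -gt 0
        if ($Disk.HealthStatus -ne 'Healthy' -or $statusNotOk) {
            $Disk | Select-Object FriendlyName, SerialNumber, DeviceId, Usage, CanPool,
                HealthStatus, OperationalStatus
        }
    }
}
```

On a cluster node it could be used as `Get-StorageSubSystem Cluster* | Get-PhysicalDisk | Select-ProblemDisk | Format-Table -AutoSize`, which keeps the healthy disks out of the way so the stuck one stands out.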

During testing we thought it might be a DOA disk, so we got another disk to try, with the exact same result.

At this point MS support was involved, including the new dedicated AzHCI team, who are working some of these cases even though this was a 2019 installation. Since these are Lenovo MX certified nodes, they created the ticket and got the case transferred to the right team.

We started troubleshooting, and I got a lot of good troubleshooting and reset commands for the disks, such as:

Clear-PhysicalDiskHealthData, which you can download from here.

This did not work either.

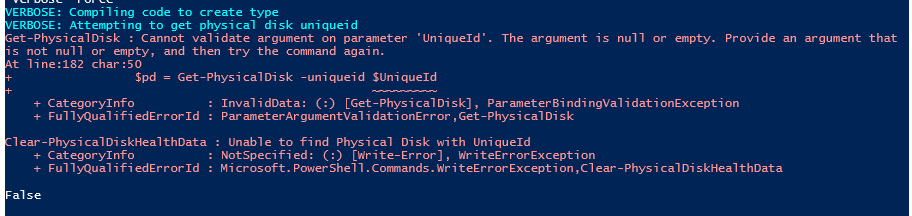

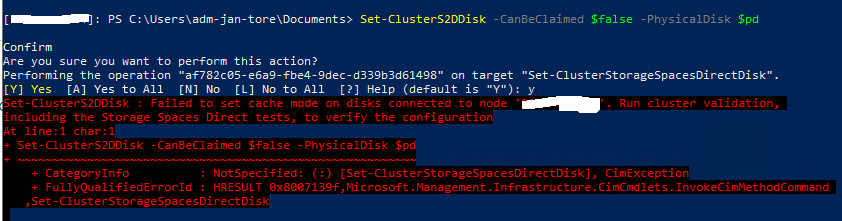

The next thing we tried was to change the claim properties of the physical disk:

```powershell
# First, reset the physical disk; this retries the claim operation
# (2010 is the S2D device ID of the affected disk in this case)
$pd = Get-PhysicalDisk | Where-Object DeviceId -eq '2010'
Set-ClusterS2DDisk -Reset -PhysicalDisk $pd

# Let's set the claim to false
Set-ClusterS2DDisk -CanBeClaimed $false -PhysicalDisk $pd

# Next, clear the drive with Clear-Disk
# (Get-Disk sees local disks, so run this on the node that hosts the drive; 10 is its disk number there)
$disk = Get-Disk -Number 10
$disk | Set-Disk -IsReadOnly $false
$disk | Set-Disk -IsOffline $false
$disk | Clear-Disk -RemoveData -RemoveOEM

# Then set the claim back to true, using a refreshed copy of the physical disk object
$pd = Get-PhysicalDisk -UniqueId $pd.UniqueId
Set-ClusterS2DDisk -CanBeClaimed $true -PhysicalDisk $pd
```

Running these commands, it failed on the step that sets the claim to $false.

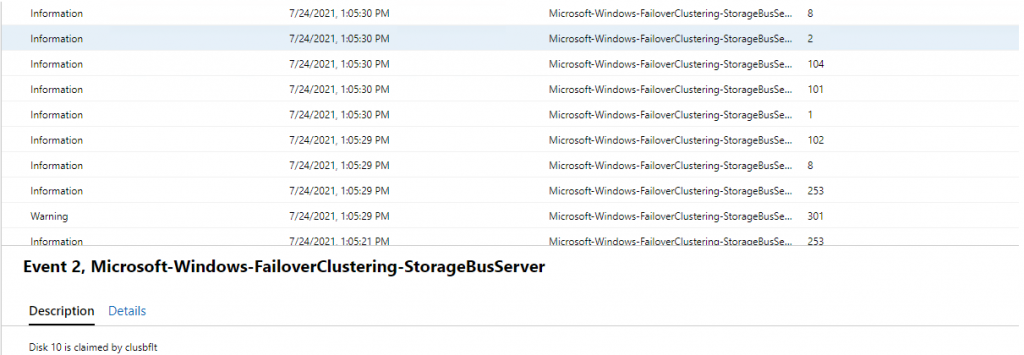

This was very strange and should not really happen. Looking at the disk, it was still showing “Verification in progress”. We then started looking at the event logs for the storage bus.

We saw that the disk had been claimed by the cluster storage bus (ClusBflt).

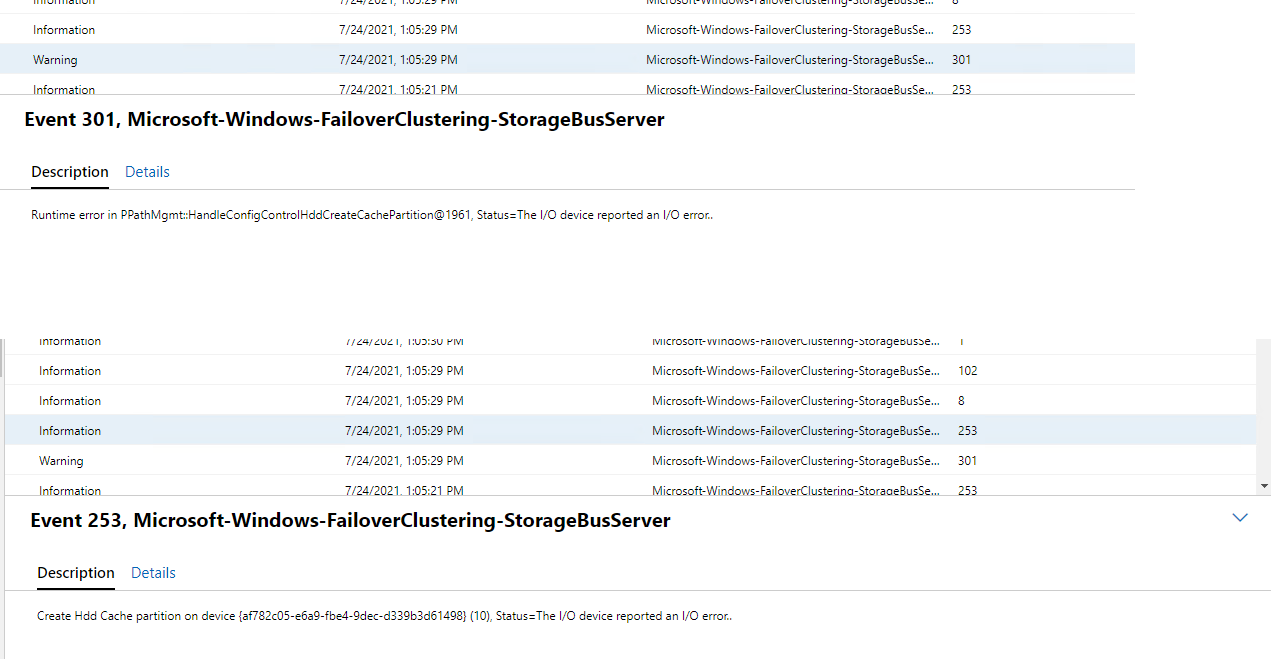

But just seconds before that, we got event IDs 301 and 253.

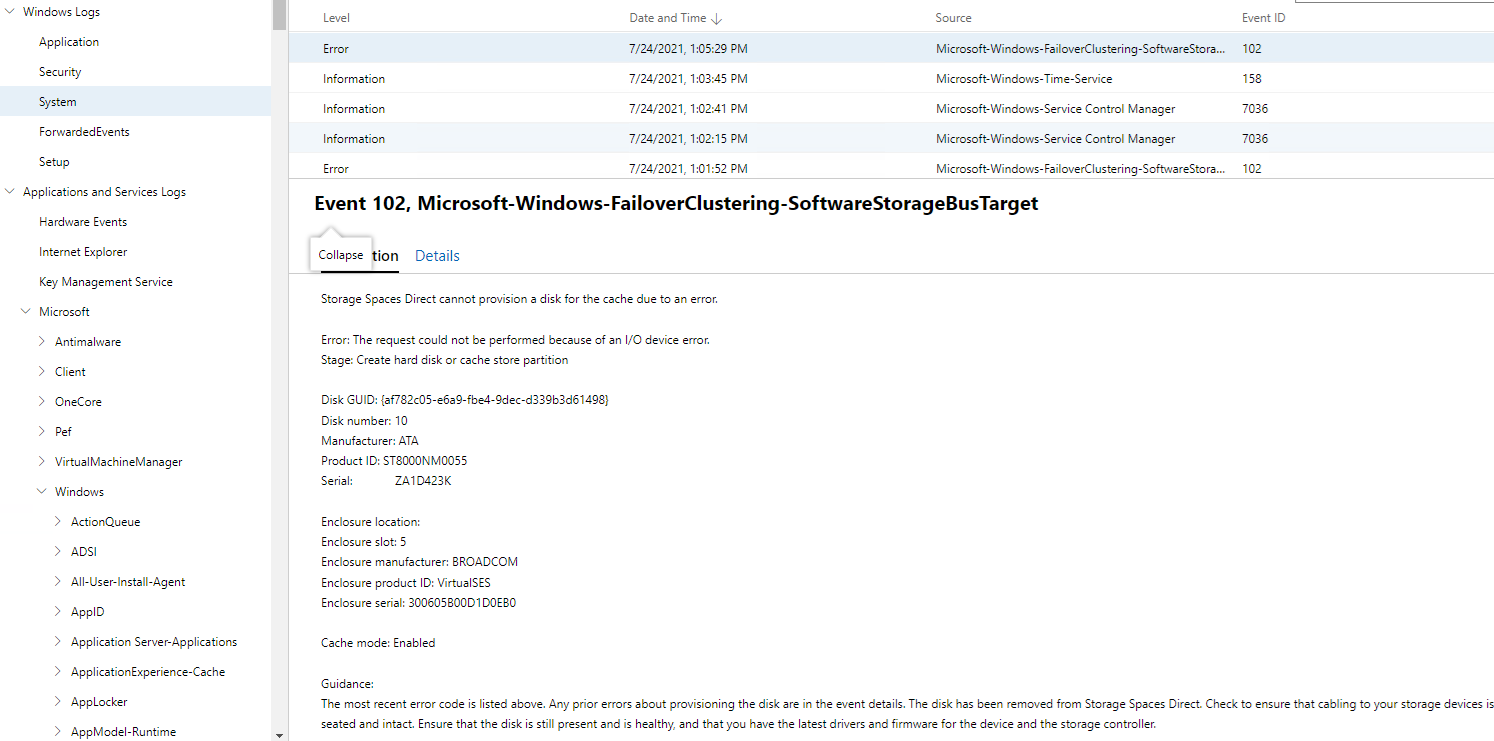

These indicate an I/O error when trying to claim the disk. At the same time, a System error was logged, event ID 102, saying that Storage Spaces Direct cannot provision a disk for the cache due to an error: “Error: The request could not be performed because of an I/O device error. Stage: Create hard disk or cache store partition.”
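Instead of clicking through Event Viewer on every node, these events can be pulled with Get-WinEvent. The sketch below is a hypothetical helper, Get-DiskClaimEvent; it assumes the event IDs seen in this case (301 and 253 in the storage bus logs, 102 in the System log) and a two-hour look-back window, and it discovers the ClusBflt channels with a wildcard rather than hard-coding their names.

```powershell
# Hypothetical helper: collect the storage bus and System events that pointed
# at the I/O error during the disk claim. Event IDs are the ones from this case.
function Get-DiskClaimEvent {
    [CmdletBinding()]
    param(
        # Nodes to query; defaults to the local machine
        [string[]]$ComputerName = @($env:COMPUTERNAME),
        # Only return events newer than this (assumed window: last 2 hours)
        [datetime]$Since = (Get-Date).AddHours(-2)
    )
    $found = foreach ($node in $ComputerName) {
        # Find the cluster storage bus (ClusBflt) channels on this node
        $busLogs = @(Get-WinEvent -ComputerName $node -ListLog '*ClusBflt*' -ErrorAction SilentlyContinue |
            ForEach-Object LogName)
        if ($busLogs.Count -gt 0) {
            # Event IDs 301 and 253: logged seconds before the failed claim
            Get-WinEvent -ComputerName $node -ErrorAction SilentlyContinue -FilterHashtable @{
                LogName = $busLogs; Id = 301, 253; StartTime = $Since
            }
        }
        # Event ID 102 in the System log: S2D could not provision the disk
        Get-WinEvent -ComputerName $node -ErrorAction SilentlyContinue -FilterHashtable @{
            LogName = 'System'; Id = 102; StartTime = $Since
        }
    }
    # One timeline across all nodes, oldest first
    $found | Sort-Object TimeCreated |
        Select-Object MachineName, TimeCreated, LogName, Id, LevelDisplayName, Message
}
```

From a cluster node, something like `Get-DiskClaimEvent -ComputerName (Get-ClusterNode).Name | Format-List` (Get-ClusterNode comes from the FailoverClusters module) gives one timeline for the whole cluster. Event IDs are not unique across providers, so it is worth reading the Message to confirm that event 102 really is the Storage Spaces Direct provisioning error.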

Now it was clear that there was an underlying hardware issue that had nothing to do with Storage Spaces itself. The suspected culprit was the backplane, specifically a faulty port on it.

A new backplane and cables were ordered. After the backplane was replaced and the disk was added back into the server, lo and behold, the disk joined the storage pool without any issues.

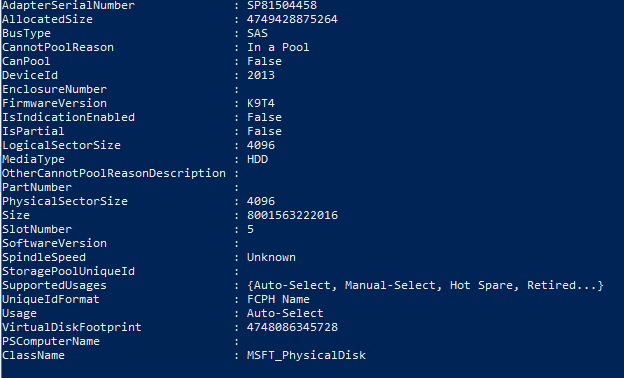

And now the new drive, which Lenovo sent as another replacement, shows as part of the pool.
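To confirm from PowerShell that a replacement drive actually made it into the pool, one option is to look for its serial number among the disks of the non-primordial pool. Test-DiskInPool below is a hypothetical helper, a minimal sketch that assumes a single S2D pool and that you know the replacement drive's serial number.

```powershell
# Hypothetical helper: returns $true if a disk with the given serial number
# is a member of a non-primordial storage pool (the pool S2D created).
function Test-DiskInPool {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$SerialNumber
    )
    # The primordial pool only lists available disks, so skip it
    $pool = Get-StoragePool -IsPrimordial $false
    $member = $pool | Get-PhysicalDisk | Where-Object SerialNumber -eq $SerialNumber
    [bool]$member
}
```

For example, `Test-DiskInPool -SerialNumber '<serial of the replacement drive>'`, followed by `Get-StorageJob` to watch the repair and rebalance jobs that typically run after a capacity disk joins.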

So to summarize: if a disk won’t join the pool, check the Failover Clustering storage bus (ClusBflt) logs and the System event log, as they will show warning and error messages when the cluster tries to add the disk to the pool. Any I/O error during this process will stop the disk from joining the pool, and it most likely points to a hardware issue, such as a faulty slot on the backplane.

reference: https://jtpedersen.com/2021/08/capacity-disk-failing-to-join-storagepool-on-azure-stack-hci-cluster/

3 thoughts on “Capacity Disk failing to join StoragePool on Azure Stack HCI Cluster”

Glad we could help you!

Thanks for sharing this article, nice job as usual Jan-Tore

Thanks for the good help; I can't always say that about MS support. But it’s getting a lot better than, let’s say, 5-10 years ago 🙂